NVIDIA announced on Aug. 13th breakthroughs in language understanding that allow businesses to engage more naturally with customers using real-time conversational AI.

NVIDIA’s AI platform is the first to train one of the most advanced AI language models — BERT — in less than an hour and complete AI inference in just over 2 milliseconds. The groundbreaking level of performance makes it possible for developers to use state-of-the-art language understanding for large-scale applications they can make available to hundreds of millions of consumers worldwide. Read the full press release from NVIDIA.

Analyst Take: For the longest time, the fundamental belief has been that GPUs are a great technology for AI Training while CPUs are the vehicle for inference.

This announcement from NVIDIA opens the door to an important discussion among analysts, enterprises and consultants who are developing short- and long-term road maps for putting massive volumes of data to work in inference.

In this particular announcement, NVIDIA focuses on one of the most important opportunities for inference, Conversational AI.

I’ve been an outspoken advocate for chatbots as a vehicle to enhance customer experience and employee engagement, but to date, much of what we experience with AI-enabled chat is very static and discrete: ask one question, get one answer (not always a good one), and that is the end of the interaction.

This experience has led some customers to become disillusioned with AI-powered assistants and chat technologies. It doesn’t reflect the way people want to engage, and it is extremely frustrating when two connected thoughts can’t be processed together. For instance:

Me: What time is my flight to San Francisco today?

AI: Your flight on American Airlines is scheduled for 10:07.

Great, I asked, and the assistant was able to quickly reference my calendar and give me the information I sought.

But if I wanted to know the weather in San Francisco, most chatbots would require me to ask that question explicitly. However, that isn’t how we would converse. Most likely I would follow up with a question like…

Me: Ok, thanks, what is the weather going to be when I arrive?

AI: Gives me the weather where I am. (If it can even contextualize that question)

Instead, I would have to start over, as if it were a brand new conversation, and ask about the weather in San Francisco, because the assistant has no recollection that I just asked about my flight.

So, to make a long story long, that is what we are dealing with today. What the inference advancements NVIDIA is touting could potentially enable is conversational AI that can handle the real-world turns of a conversation, first by making connections between two interdependent thoughts and, in time, by making less obvious connections (think adding dinner reservations, booking a hotel or scheduling a meeting with a colleague in your future location).
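To make the idea of carrying context between turns concrete, here is a deliberately tiny sketch. It is not how BERT or any NVIDIA system works: simple keyword matching stands in for a language model, and the names `DialogueContext`, `find_city`, `handle_turn`, the city list and the canned replies are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical cities the toy assistant recognizes (illustration only).
KNOWN_CITIES = ("San Francisco", "Chicago")


@dataclass
class DialogueContext:
    """State carried from one turn of the conversation to the next."""
    destination: Optional[str] = None


def find_city(utterance: str) -> Optional[str]:
    """Return the first known city mentioned in the utterance, if any."""
    lowered = utterance.lower()
    for city in KNOWN_CITIES:
        if city.lower() in lowered:
            return city
    return None


def handle_turn(utterance: str, ctx: DialogueContext) -> str:
    """Answer one turn, updating and reusing the shared context."""
    city = find_city(utterance)
    if city is not None:
        ctx.destination = city  # remember the most recent destination

    lowered = utterance.lower()
    if "flight" in lowered:
        return f"Looking up your flight to {ctx.destination or 'an unknown destination'}."
    if "weather" in lowered:
        # With no remembered destination, fall back to the user's location,
        # which is what a stateless chatbot would always do.
        place = ctx.destination or "your current location"
        return f"Looking up the weather in {place}."
    return "Sorry, I did not understand that."


ctx = DialogueContext()
first = handle_turn("What time is my flight to San Francisco today?", ctx)
second = handle_turn("Ok, thanks, what is the weather going to be when I arrive?", ctx)
print(first)
print(second)
```

Because the second turn reuses the destination remembered from the first, the weather lookup targets San Francisco rather than the user's current location. A production assistant would have to infer that link from language rather than from a hard-coded keyword list, which is where fast inference on large models comes in.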

Standout Announcements

In terms of the announcement itself, a few things caught my eye regarding GPUs’ impact on AI inference.

- NVIDIA GPUs have smashed the 10-millisecond threshold for inference on BERT by about 78 percent, coming in at 2.2ms. It’s worth noting that many optimized CPUs come in at more like 40ms. To fully appreciate 2ms: in a single flap of a honey bee’s wings, NVIDIA’s GPUs will perform 2 inferences. Another good analogy would be 100 inferences in the time it takes for a single human heartbeat.

- The model that NVIDIA is building for developers to leverage in their software is massive, which is critical for NLP and conversational AI. The company noted 8.3 billion parameters, which is 24x the size of BERT-Large (a very common model used for similar applications).

- Microsoft, one of the most outspoken players and a market leader in the conversational AI space, is using NVIDIA GPUs for inference and is touting some promising figures, including a 2x reduction in latency and 5x the throughput when running inference on Azure NVIDIA GPUs versus a CPU-based platform. As of now this optimization has been applied to Bing, but I can easily see Microsoft expanding use cases for its virtual assistant and chatbot capabilities across its portfolio.
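For readers who want to check the numbers, the arithmetic behind the bullets above can be sketched in a few lines. The inputs are the figures quoted in this article; the variable names are mine, and the per-second figure assumes inferences run strictly one after another with no batching, which is a simplification.

```python
# Figures quoted in the bullets above.
threshold_ms = 10.0     # latency threshold cited for conversational AI
gpu_latency_ms = 2.2    # reported BERT inference latency on NVIDIA GPUs
cpu_latency_ms = 40.0   # approximate figure cited for optimized CPUs
model_params = 8.3e9    # parameters in NVIDIA's large language model
size_ratio = 24         # stated multiple of BERT-Large

# How far below the 10 ms threshold the GPU result lands, in percent.
reduction_pct = (threshold_ms - gpu_latency_ms) / threshold_ms * 100

# Speedup of the GPU figure over the quoted CPU figure.
gpu_vs_cpu = cpu_latency_ms / gpu_latency_ms

# Back-to-back inferences per second (assumes no batching or overlap).
sequential_per_second = 1000.0 / gpu_latency_ms

# BERT-Large parameter count implied by the 24x claim.
implied_bert_large = model_params / size_ratio

print(f"Below the threshold by {reduction_pct:.0f} percent")
print(f"GPU vs. CPU latency: {gpu_vs_cpu:.1f}x faster")
print(f"Sequential inferences per second: {sequential_per_second:.0f}")
print(f"Implied BERT-Large size: {implied_bert_large / 1e6:.0f} million parameters")
```

The 2.2ms result works out to 78 percent under the 10ms threshold and roughly 18x faster than the 40ms CPU figure, and the 24x claim implies a BERT-Large of about 346 million parameters.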

The Final Word

I believe we have a fascinating development going on as NVIDIA is opening up a discussion around GPU technology and its future influence on AI. While training has certainly been its bread and butter, it appears there is a compelling case for GPUs to play a major role in inference and a rapidly approaching future where conversational AI actually resembles having a conversation.

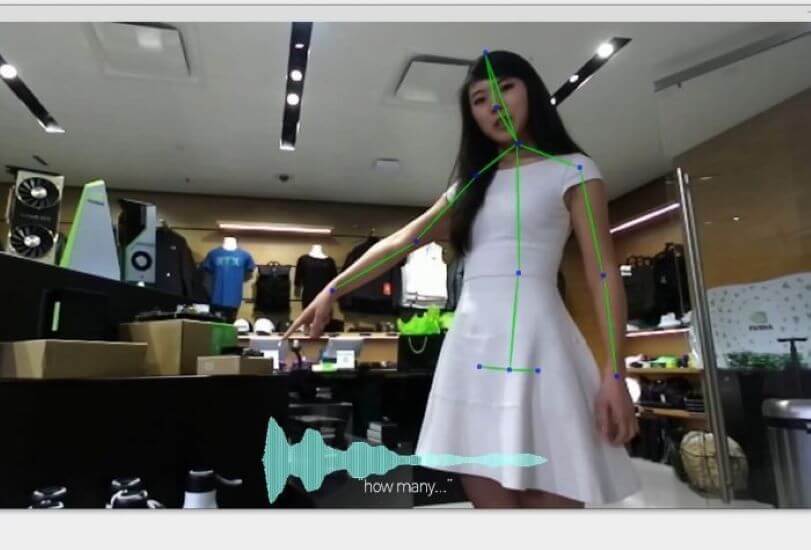

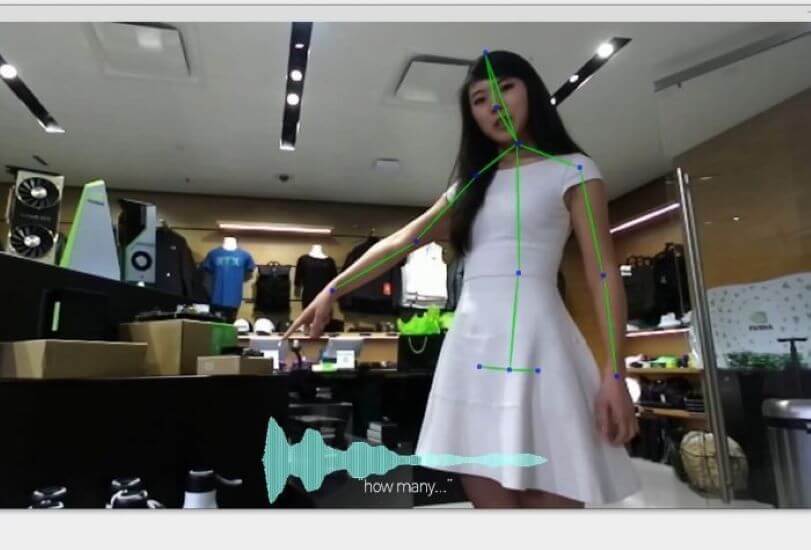

Image: NVIDIA

The original version of this article was first published on Futurum Research.

Daniel Newman is the Principal Analyst of Futurum Research and the CEO of Broadsuite Media Group. Living his life at the intersection of people and technology, Daniel works with the world’s largest technology brands exploring Digital Transformation and how it is influencing the enterprise. From Big Data to IoT to Cloud Computing, Newman makes the connections between business, people and tech that are required for companies to benefit most from their technology projects, which leads to his ideas regularly being cited in CIO.Com, CIO Review and hundreds of other sites across the world. A 5x Best Selling Author including his most recent “Building Dragons: Digital Transformation in the Experience Economy,” Daniel is also a Forbes, Entrepreneur and Huffington Post Contributor. MBA and Graduate Adjunct Professor, Daniel Newman is a Chicago Native and his speaking takes him around the world each year as he shares his vision of the role technology will play in our future.