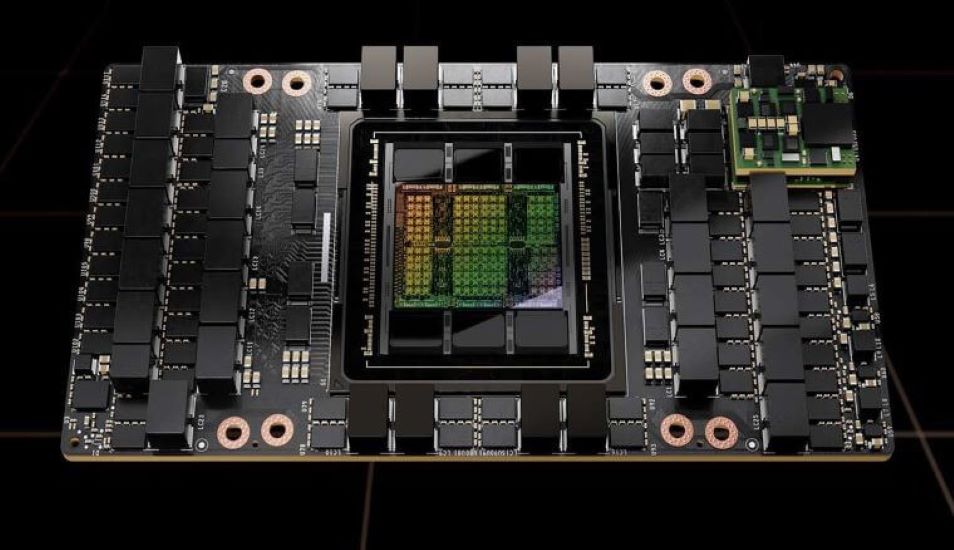

The News: NVIDIA H100 Tensor Core GPUs, the company’s newest and most powerful chips for AI and machine learning, just set new performance records in MLPerf Inference v2.1, the latest round of the industry-standard benchmark tests for AI inference performance. In this round, which produces benchmarks for data center and edge uses, the NVIDIA H100 GPUs delivered up to 4.5x more performance than NVIDIA’s previous-generation A100 GPUs. Read the full NVIDIA blog post on its latest MLPerf v2.1 AI performance test results.

NVIDIA H100 MLPerf 2.1 AI Performance Results Are Impressive

Analyst Take: With its latest NVIDIA H100 GPUs, NVIDIA again establishes itself as the company to beat when it comes to GPU performance for AI inferencing, based on the newest MLPerf Inference v2.1 benchmarks from the open source engineering consortium, MLCommons.

I realize my commentary here is repetitive, but the reality is that every time we write about the latest MLPerf results, NVIDIA is at the top of the results mix with its then-latest GPUs. Given NVIDIA’s continuing technical leadership in GPUs used for AI and ML, this should not be a surprise.

What is also impressive is that unlike competitors, NVIDIA enters its GPUs in each of the MLPerf data center and edge computing tests, rather than just hand-picking the tests where its GPUs will perform best. This gives a clear picture of the performance of NVIDIA GPUs and how they stack up against competitors in any category.

The MLPerf v2.1 test results are important in the industry because they provide an excellent way to evaluate AI inference performance across GPUs, placing each one head-to-head on an equal footing. MLPerf is a respected yardstick: each vendor can submit its chips for comparison against others to establish real-world performance leadership.

In this latest round of MLPerf benchmark testing, the NVIDIA H100 Tensor Core GPUs set world records in inference on all workloads, delivering up to 4.5x more performance than previous-generation NVIDIA A100 Ampere GPUs. That is impressive, and it highlights the H100 as the chip to use for the highest AI inference performance on advanced AI models.

These were the first MLPerf tests to include the NVIDIA H100 GPUs, which are slated to be available later in 2022.

Notably, the NVIDIA H100 GPUs excelled on the MLPerf benchmark test involving the BERT model for natural language processing, one of the largest and most performance-hungry of the MLPerf AI models.

How the NVIDIA H100 Helps Boost MLPerf Results

The dramatic performance boost for the NVIDIA H100 GPUs in the MLPerf Inference v2.1 benchmark tests comes from design and engineering built on decades of deep experience in GPU architecture. The H100 GPUs include 80 billion transistors and are built on a TSMC 4nm process. The NVIDIA H100 also includes a new Transformer Engine, which NVIDIA says speeds up transformer models by as much as 6x compared with the previous generation, as well as a highly scalable, high-speed fourth-generation NVIDIA NVLink interconnect.

The NVIDIA H100 Tensor Core GPU is the company’s first GPU based on its NVIDIA Hopper accelerated computing architecture, which was unveiled in March 2022 as the successor to the Ampere architecture introduced in 2020.

In the latest MLPerf Inference v2.1 benchmarks, the H100 raised the bar in per-accelerator performance across all six neural networks included in the round. The H100 performed admirably in both throughput and speed in the separate server and offline scenarios of the data center benchmark tests.
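As a rough illustration of what a per-accelerator comparison means, the sketch below normalizes each system's throughput by its accelerator count before taking the ratio. MLPerf's primary metrics are whole-system results, so this normalization is a derived view. The helper name `per_accelerator_speedup` and the throughput figures are hypothetical and are not actual MLPerf v2.1 results.

```python
def per_accelerator_speedup(new_throughput, new_count, old_throughput, old_count):
    """Ratio of per-accelerator throughput (e.g. samples/second) between two systems.

    Hypothetical helper for illustration only; not an MLCommons or NVIDIA tool.
    """
    if new_count <= 0 or old_count <= 0:
        raise ValueError("accelerator counts must be positive")
    # Divide each system's total throughput by the number of accelerators it used.
    new_per_accel = new_throughput / new_count
    old_per_accel = old_throughput / old_count
    return new_per_accel / old_per_accel


# Hypothetical numbers: a 1-GPU system at 9,000 samples/s vs. an 8-GPU system at 16,000 samples/s.
speedup = per_accelerator_speedup(9000.0, 1, 16000.0, 8)
print(f"{speedup:.1f}x")  # 4.5x
```

Normalizing first matters because a system with more accelerators can post a higher total throughput even when each individual chip is slower.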

MLPerf is developed by MLCommons, a consortium of AI leaders from academia, research labs, and industry that builds what it calls “fair and useful benchmarks” aimed at producing unbiased evaluations of training and inference performance for hardware, software, and services under prescribed conditions. According to the group, MLCommons runs the benchmarks at regular intervals and adds new workloads as needed to represent the state of the art in AI. The benchmark suites are open source and peer reviewed.

The latest MLPerf Inference v2.1 tests are built to stress machine learning models, software, and hardware, while also monitoring energy consumption.

Included in this round of testing for data center and edge systems were entries from NVIDIA and a wide range of other hardware, software, and cloud companies, including Alibaba, ASUSTeK, Azure, Biren, Dell, Fujitsu, GIGABYTE, H3C, HPE, Inspur, Intel, Krai, Lenovo, Moffett, Nettrix, Neural Magic, OctoML, Qualcomm Technologies, Inc., SAPEON, and Supermicro.

Earlier NVIDIA A100 GPUs Also Continue Their Performance Leadership

The MLPerf Inference v2.1 benchmark results also showed the continuing AI inference performance leadership of NVIDIA’s established A100 GPUs in today’s marketplace. In the tests, NVIDIA A100 GPUs won more tests across data center and edge computing categories and scenarios than any competitor. NVIDIA A100 performance in the MLPerf tests has improved 6x since the GPUs were first tested by the organization in July 2020.

NVIDIA MLPerf v2.1 Performance Overview

In the ultra-competitive world of AI inference, NVIDIA continues to display its engineering prowess and technological expertise in producing hardware and software that deliver both in the marketplace and in MLPerf benchmark results.

The latest MLPerf Inference v2.1 results show that NVIDIA is maintaining its competitive leadership in a field that constantly demands higher performance and broader capabilities.

We believe that as NVIDIA continues to focus on the future of AI, it will continue to play a leadership role in delivering the promise and performance of AI to the world.

NVIDIA continues to be an exciting company to watch in the AI marketplace around the globe, and it will be fascinating to track its next moves in this ever-evolving field.

Disclosure: Futurum Research is a research and advisory firm that engages or has engaged in research, analysis, and advisory services with many technology companies, including those mentioned in this article. The author does not hold any equity positions with any company mentioned in this article.

Analysis and opinions expressed herein are specific to the analyst individually and data and other information that might have been provided for validation, not those of Futurum Research as a whole.

Image Credit: NVIDIA

The original version of this article was first published on Futurum Research.

Todd is an analyst with more than 21 years of experience as a technology journalist covering a wide variety of tech-focused areas.