The News: This week, NVIDIA held its annual GTC Conference, where CEO Jensen Huang delivered updates from his famous kitchen at breakneck speed. For a full rundown of the announcements, check out NVIDIA’s newsroom.

Analyst Take: GTC is always a lock to have a flurry of announcements that cross its core segments from automotive to visualization to gaming to datacenter. This year was no different, and covering the entire event in a single research note would be nearly impossible.

Over the past couple of years, no component of NVIDIA’s business has grown faster than its datacenter business. As AI, ML, and data science continue to serve as a focal point for enterprise growth, NVIDIA’s solutions encompass the full stack of requirements to deliver on the promise of AI. With this year’s GTC, the company rolled out updates and new solutions that will further entrench its contributions to enterprise AI.

For this research note, I am focusing on three HPC-inspired announcements that I feel were particularly noteworthy.

The DGX SuperPOD and DCaaS DGX Station

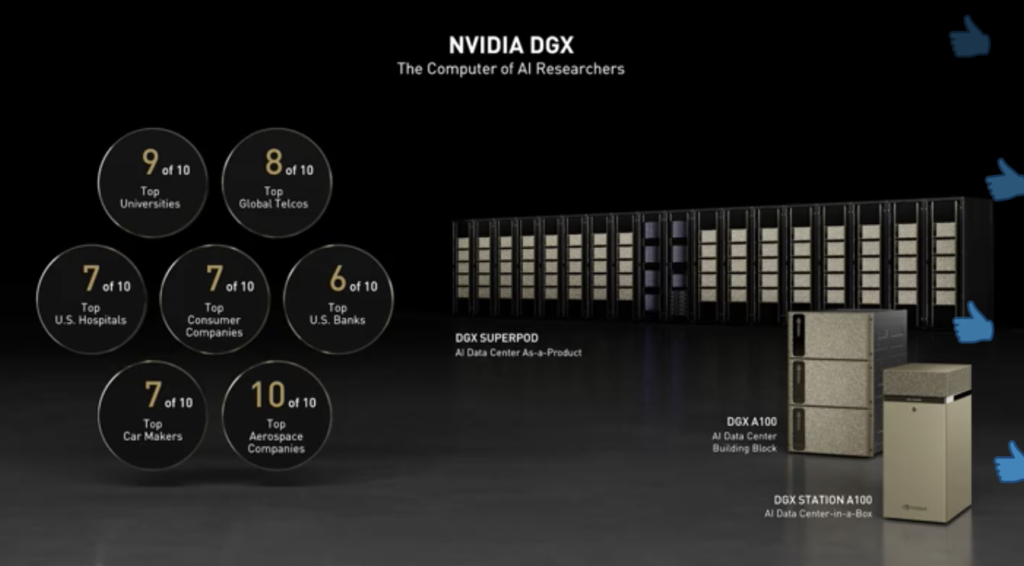

NVIDIA announced several updates to its DGX lineup, including the next generation of its DGX SuperPOD, a system comprising 20 or more DGX A100 systems along with NVIDIA’s InfiniBand HDR networking. The latest SuperPOD is cloud-native and multi-tenant. I appreciated the incorporation of NVIDIA’s BlueField-2 DPUs to offload, accelerate, and isolate users’ data, which leaves more resources for compute and fewer tied up in infrastructure-related workloads. This announcement has a short horizon to GA, as the new SuperPOD is set to be available through NVIDIA partners in the second quarter.

Another interesting announcement around the DGX SuperPOD was the introduction of what the company is calling ‘Base Command’ to control AI training and operations on DGX SuperPOD infrastructure. With Base Command, multiple users and IT teams are able to securely access, share and operate the infrastructure. Base Command will also be available in Q2.

NVIDIA also announced a new subscription offering that I’m affectionately calling AI DCaaS for the DGX Station A100, which is simply a desktop model of an AI computer. This program enables NVIDIA to literally drop a DGX at your doorstep that you can use for specific applications for a period of time on a subscription basis. When done, you pack it up and return it. The new offering should make it easier for organizations to work on AI development outside of the data center. Subscriptions start at a list price of $9,000 per month. As a bit of perspective, the DGX Stations have a starting price of $149,000, while the DGX SuperPOD starts at $7 million and scales up to $60 million. This is a clever little addition to the lineup, and I see it being a popular option: it appeals to the OpEx crowd, and it also serves as a nice gateway to computing at this level at a more palatable price point.
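To put the subscription and purchase prices side by side, here is a minimal back-of-the-envelope sketch in Python. It uses only the two list prices quoted above. The function name `break_even_months` and the six-month project length are illustrative assumptions, and the calculation ignores financing, support contracts, resale value, and any subscription terms NVIDIA may attach.

```python
import math


def break_even_months(purchase_price: float, monthly_fee: float) -> int:
    """Return the first whole month in which cumulative subscription
    fees meet or exceed the purchase price (simple division, no discounting)."""
    if monthly_fee <= 0:
        raise ValueError("monthly_fee must be positive")
    return math.ceil(purchase_price / monthly_fee)


# List prices quoted in this note (USD).
STATION_LIST_PRICE = 149_000
SUBSCRIPTION_PER_MONTH = 9_000

# Hypothetical project length, chosen only for illustration.
PROJECT_MONTHS = 6

months = break_even_months(STATION_LIST_PRICE, SUBSCRIPTION_PER_MONTH)
project_cost = PROJECT_MONTHS * SUBSCRIPTION_PER_MONTH

print(f"Cumulative fees reach the purchase price in month {months}")
print(f"A {PROJECT_MONTHS}-month project on subscription costs ${project_cost:,}")
```

Under these simplified assumptions, subscribing is the cheaper route for projects shorter than roughly a year and a half, which is consistent with the gateway role described above.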

Grace: NVIDIA’s First CPU for the Data Center

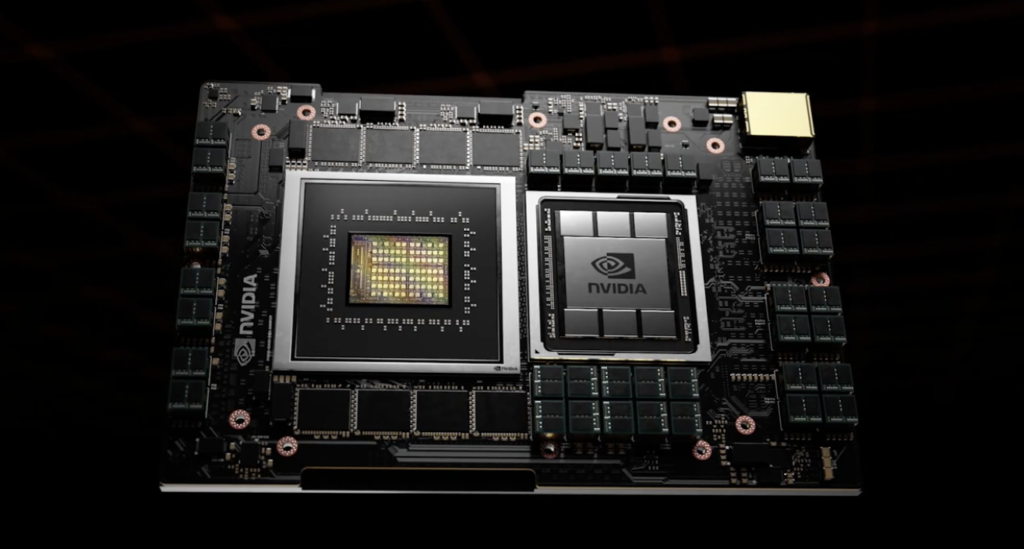

Grace, which is slated to be generally available in 2023, marks a pivotal moment for the company, as it formalizes NVIDIA’s commitment to play in the datacenter CPU space. Grace, NVIDIA’s Arm-based processor, is purpose-built to power advanced applications that tap into large datasets and models (think beyond a trillion parameters) and rely on ultra-fast compute and robust memory. The most prominent use cases will be around natural language processing, recommender systems, and AI supercomputing.

Perhaps the most striking spec I heard during yesterday’s keynote was that a Grace-based system will be able to train a one-trillion-parameter natural language processing (NLP) model 10x faster than today’s state-of-the-art NVIDIA DGX-based systems, which run on x86 CPUs.

Another important capability of Grace is the sophistication of its interconnects, which allow high-speed data transfers at ultra-low latency. Grace will incorporate NVIDIA’s 4th Gen NVLink interconnect technology, which provides a 900 GB/s connection between the Grace CPU and NVIDIA GPUs. This will enable 30x higher aggregate bandwidth compared to today’s leading servers.

Other noteworthy specifications on Grace include its memory and power handling. The chip uses an LPDDR5x memory subsystem that is set to deliver twice the bandwidth and 10x better energy efficiency compared with DDR4 memory. I was also drawn to the new architecture that NVIDIA is using for Grace, which provides unified cache coherence with a single memory address space. This approach combines the system and HBM GPU memory to improve and simplify programmability.

The company also named its first confirmed customers that plan to deploy Grace: the Swiss National Supercomputing Centre (CSCS) and the US Department of Energy’s Los Alamos National Laboratory. Both labs plan to launch Grace-powered supercomputers, built by HPE, in 2023.
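For a rough sense of scale, the sketch below estimates how long it would take to move the weights of a one-trillion-parameter model across a 900 GB/s link. The 2 bytes per parameter (16-bit weights) is my assumption, not an NVIDIA figure, and the function name `ideal_transfer_seconds` is hypothetical. The result is an idealized lower bound that ignores latency, protocol overhead, and contention.

```python
def ideal_transfer_seconds(
    num_parameters: float,
    bytes_per_parameter: float,
    link_gb_per_s: float,
) -> float:
    """Idealized time to move a model's weights over a link.

    Uses decimal gigabytes (1 GB = 1e9 bytes) and assumes the link
    sustains its full rated bandwidth for the whole transfer.
    """
    if link_gb_per_s <= 0:
        raise ValueError("link_gb_per_s must be positive")
    total_gb = num_parameters * bytes_per_parameter / 1e9
    return total_gb / link_gb_per_s


# Figures from this note: one trillion parameters, 900 GB/s CPU-to-GPU link.
PARAMETERS = 1e12
LINK_GB_PER_S = 900

# Assumption for illustration only: 16-bit weights, i.e. 2 bytes per parameter.
BYTES_PER_PARAMETER = 2

seconds = ideal_transfer_seconds(PARAMETERS, BYTES_PER_PARAMETER, LINK_GB_PER_S)
print(f"Idealized transfer time: {seconds:.2f} s")
```

Under those assumptions, about 2 TB of weights would cross the link in a little over two seconds, which helps explain why CPU-to-GPU bandwidth matters for models at this scale.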

Overall, the launch of Grace marks an important stake in the ground for NVIDIA. While Grace does not warrant a direct comparison to most of the datacenter CPU solutions in market today, it does mark an official entry point for an NVIDIA datacenter CPU. Based upon NVIDIA’s pedigree in HPC and at the top end of the market, I expect it to be a success. With the Arm deal still pending, this won’t be the last offering, and I expect more modest offerings to come in the future based on NVIDIA’s deepening ties to Arm and the growing demand for Arm-based CPUs in the datacenter.

Futurum Research provides industry research and analysis. These columns are for educational purposes only and should not be considered in any way investment advice.

Image: NVIDIA

The original version of this article was first published on Futurum Research.

Daniel Newman is the Principal Analyst of Futurum Research and the CEO of Broadsuite Media Group. Living his life at the intersection of people and technology, Daniel works with the world’s largest technology brands exploring Digital Transformation and how it is influencing the enterprise. From Big Data to IoT to Cloud Computing, Newman makes the connections between business, people and tech that are required for companies to benefit most from their technology projects, which leads to his ideas regularly being cited in CIO.Com, CIO Review and hundreds of other sites across the world. A 5x Best Selling Author including his most recent “Building Dragons: Digital Transformation in the Experience Economy,” Daniel is also a Forbes, Entrepreneur and Huffington Post Contributor. MBA and Graduate Adjunct Professor, Daniel Newman is a Chicago Native and his speaking takes him around the world each year as he shares his vision of the role technology will play in our future.